Martin Scorsese Wants Leonardo DiCaprio to Play Frank Sinatra In His New Biopic Movie

Read More

It is one of the highest royal awards in the United Kingdom.

Read More

If you’re looking for motivation to learn something, you’re in the right place!

Read More

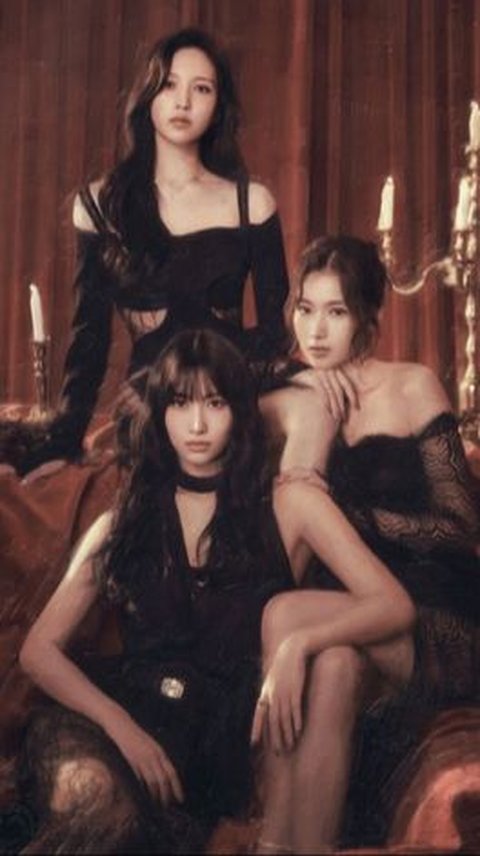

Some school-age girls are amazed watching eight female dancers singing girl group MiSaMo.

Read More

Entering motherhood is a journey filled with wonder, excitement, and anticipation.

Read More

Many moms confessed that they protect their children from harsh words and adult themes, such as sex, violence, dangerous things, and death.

Read More

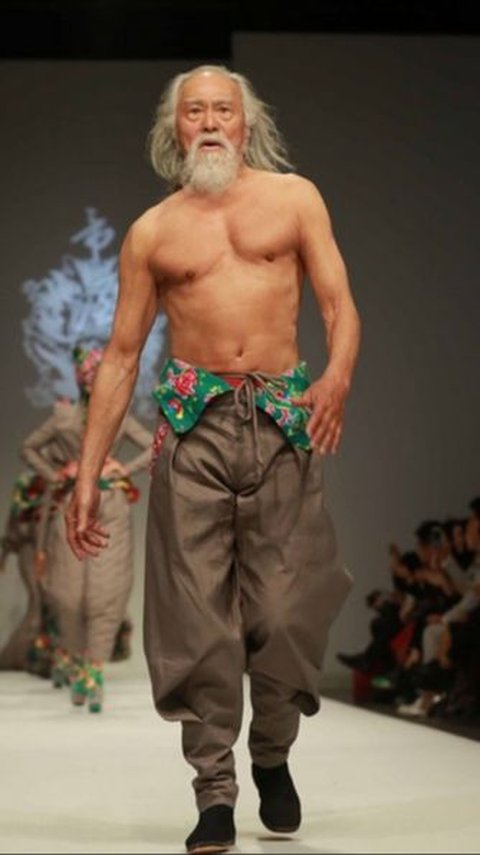

Wang Deshun is an 88-year-old actor and fashion model who became famous in 2015.

Read More

Venice is actually implementing a plan to charge daily visitors an entrance fee.

Read More

Sassy quotes can range from humorous sentences to thought-provoking remarks. However, they all have their own uniqueness and a touch of bravery.

Read More

If you're feeling brave and looking for a spooky adventure, these haunted places in Leeds will give you chills.

Read More

Chinese youth are competing to wear ugly outfits to the office.

Read More

Amidst the struggle, there are addiction quotes that resonate with the pain of addiction. These addiction quotes serve as a guiding light.

Read More

You can find many things at the Mercado Republica de San Luis Potosi market in Mexico.

Read More

Madison Beer became famous in 2012 when Justin Bieber shared one of her YouTube covers.

Read More

Let them guide you to a deeper understanding of trust in your relationships.

Read More

Finding the right horoscope match for a Libra can lead to a fulfilling and loving relationship.

Read More

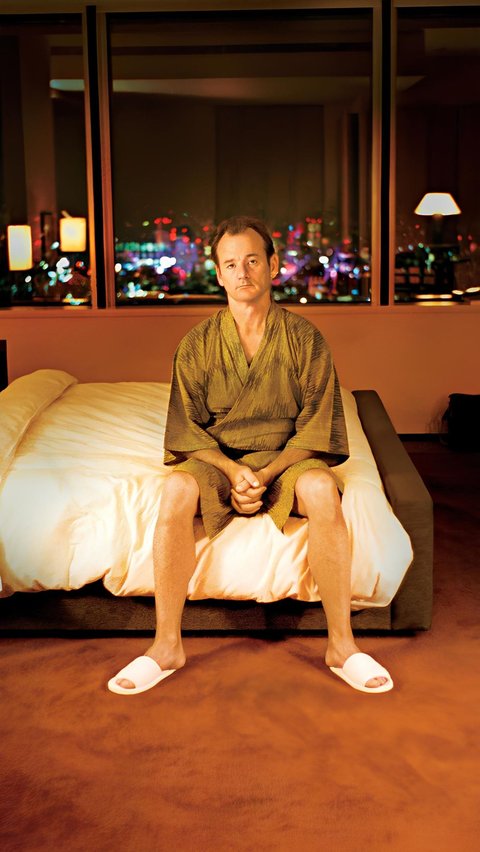

These movies about loneliness offer poignant portrayals of loneliness in various forms.

Read More

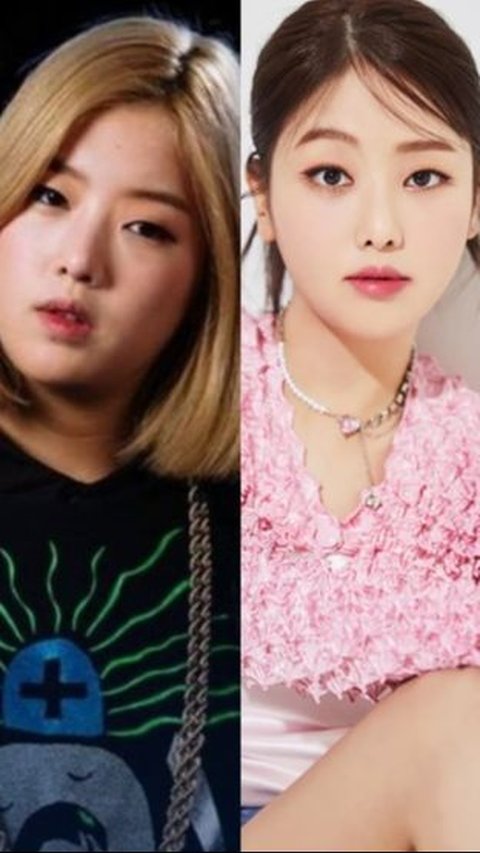

Coming back with the latest single, Kisum has amazed many people with her new appearance.

Read More

Let's explore these best cities to live in the USA and find your perfect place to call home!

Read More

The rapper shows his interest to buy one of the most popular platform via thread on X.

Read More

These quotes will help you navigate life's journey.

Read More

In fact, this announcement can be seen in users flipside accounts.

Read More

These Christian birthday wishes are more than words—they are heartfelt prayers for God's blessings upon your friend on their special day.

Read More

Apple will include original calculator app in the upcoming iPad product.

Read More

A woman in South Korea lost more than $50,000 to a scam.

Read More