A woman in South Korea lost more than $50,000 to a scam.

Read More

Let’s explore this world of stupid quotes and laugh. Maybe there is hidden wisdom to teach us.

Read More

The couple is now encouraging others to do the same thing as them.

Read More

Feminists fight for a world with equal rights, opportunities, and respect.

Read More

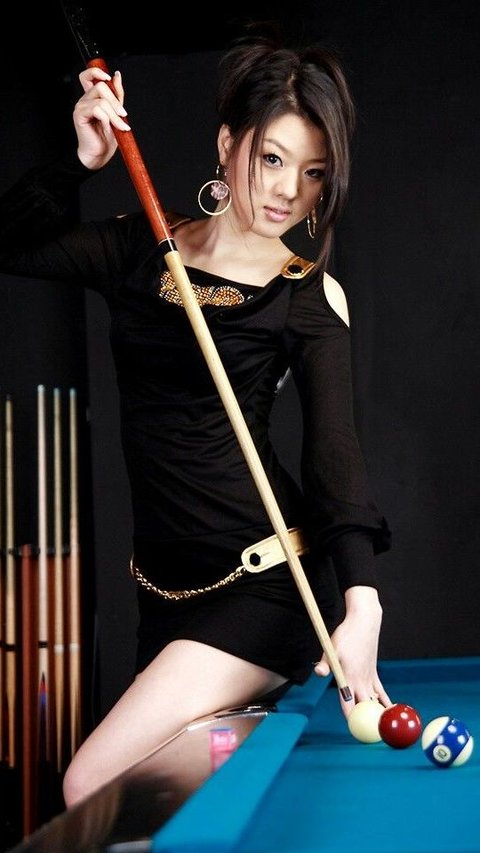

These hottest and most attractive female pool players prove that beauty and skill can coexist globally.

Read More

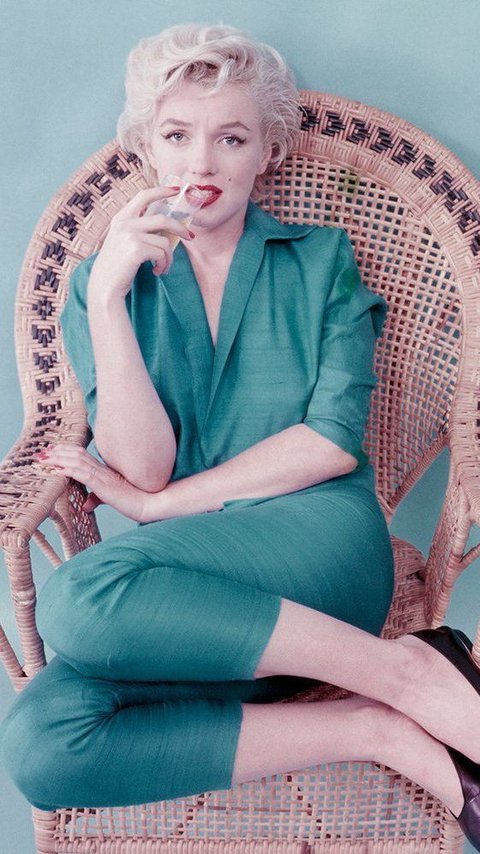

She loves to look glamorous and is famous for her beauty.

Read More

People close to Zendaya and Tom Holland say that marriage is a topic of conversation for them.

Read More

Thursdays offer a perfect opportunity to pause and reflect on the blessings surrounding us.

Read More

Doctors believe that she may have borderline personality disorde.

Read More

FinanceBuzz announced that it is celebrating the upcoming Star Wars Day.

Read More

An extremely rare blind vole has been spotted and photographed in the Australian outback.

Read More

Are you a dog lover or just looking for some good laughs? Here are some paw-some dog jokes that will brighten your day.

Read More

Anne Hathaway opens up about the "disgusting" chemistry test she went through before starring in romantic movies in the 2000s.

Read More

Whether you believe in ghosts or not, these haunted places in Cardiff, Wales, have an eerie atmosphere and feel of the past.

Read More

Quotes about fall evoke the feeling of wearing cozy sweaters and seeing colorful leaves.

Read More

Here are some of the hottest and most talented female racing drivers that charmed fans both on and off the circuit.

Read More

Matty Healy responded to his ex-Taylor Swift's new album, The Tortured Poets Department.

Read More

Taylor Swift lyrics quotes can remind you to embrace change, be fearless, and never let anyone dull your sparkle.

Read More

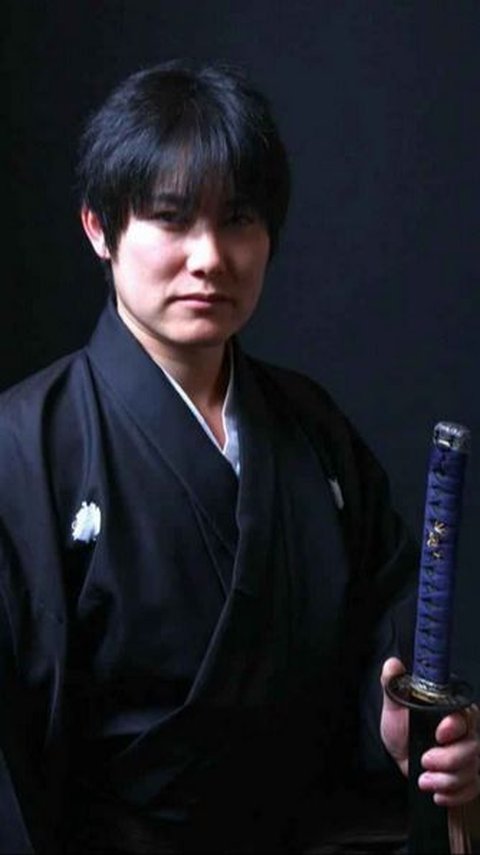

He is the Guinness World Record holder for the most sword slashes in one minute.

Read More

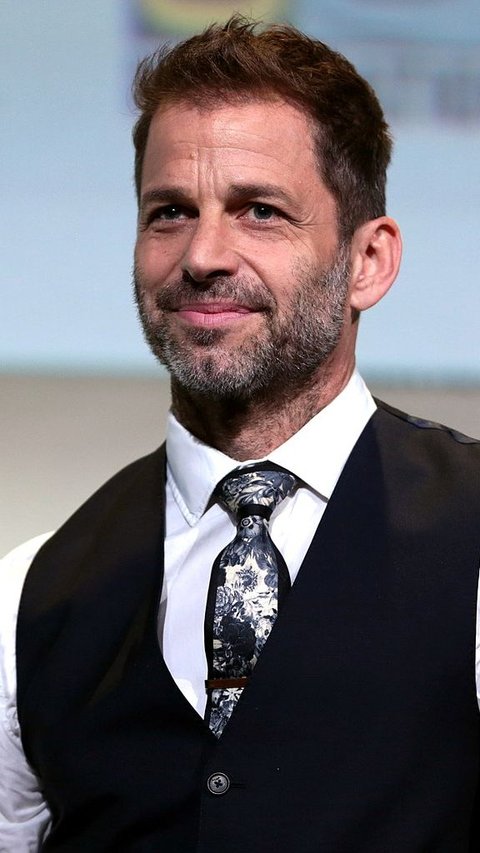

Zack Snyder is an American film director known for his works on comic book movies and superhero films. Here are the top 6 Zack Snyder movies you should watch.

Read More

Ma Dong Seok, also known as Don Lee, is a prolific actor known for his captivating performances, particularly in action films.

Read More

Finding your best soulmate as a Scorpio may need patience and openness, but these five zodiac signs could be your perfect match.

Read More

Here are the best places to visit in August in India for those who want to avoid the crowd during the peak season.

Read More

After winning Miss Buenos Aires, she went viral on Latino social media.

Read More

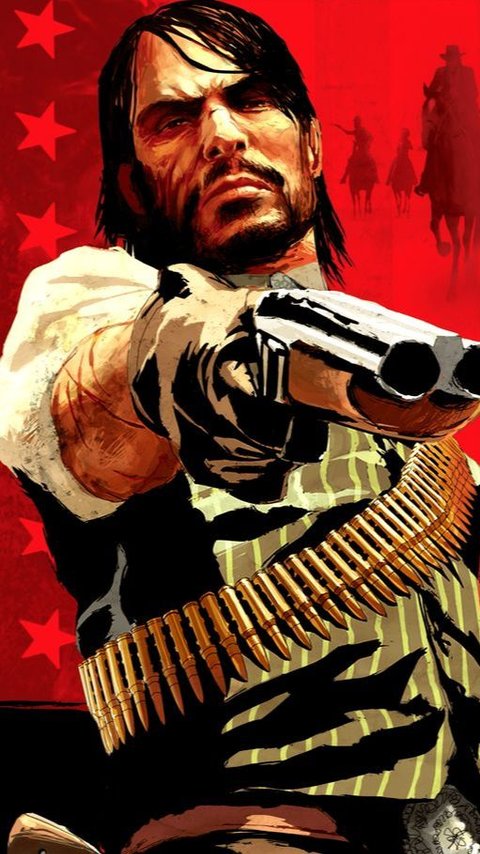

First released in 2006, the PlayStation 3 has several video game titles that have stunning graphics even today.

Read More